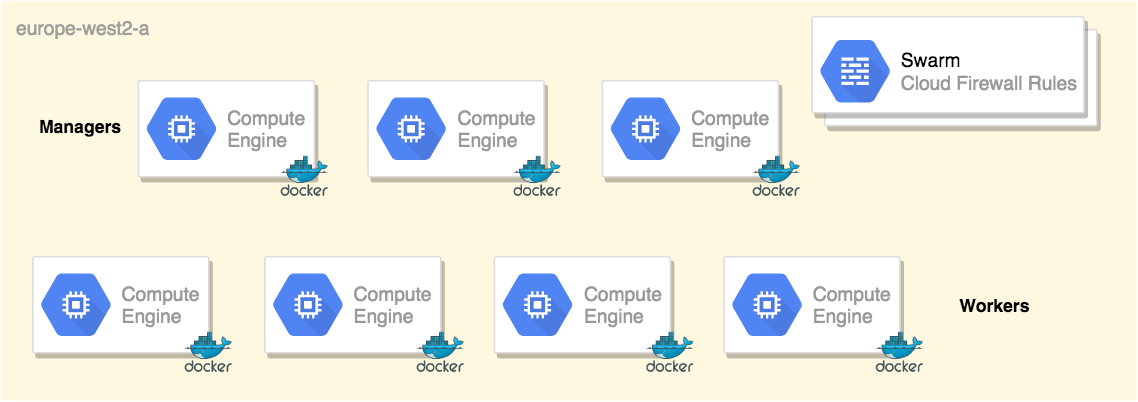

Kubernetes might be the ultimate choice when deploying heavy workloads on Google Cloud Platform. However, Docker Swarm has always been popular among developers who prefer fast deployments and simplicity, and among ops teams who are still getting comfortable with an orchestrated environment.

In this post, we will walk through how to deploy a Docker Swarm cluster on GCP using Terraform from scratch. Let’s do it!

All the templates and playbooks used in this tutorial can be found on my GitHub.

Get Started

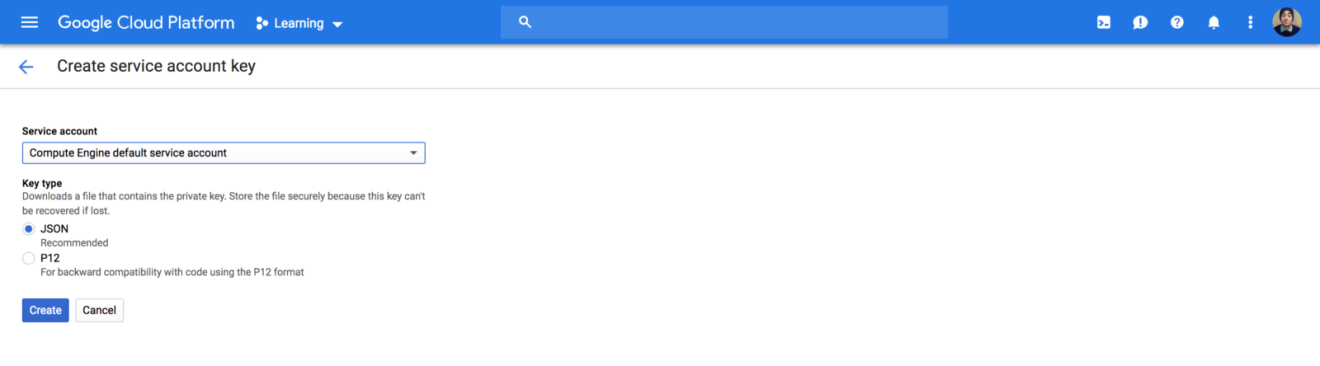

To get started, sign in to your Google Cloud Platform console and create a service account private key from IAM:

Download the JSON file and store it in a secure folder.

For simplicity, I have split my Swarm cluster components into multiple template files, each responsible for creating a specific Google Compute resource.

1. Set up your Swarm managers

In this example, I have defined the Docker Swarm managers based on the CoreOS image:

resource "google_compute_instance" "managers" {
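Only the opening line of the template survives in this copy. As a rough illustration of what such a resource might contain, here is a minimal sketch written for Terraform 0.12 or later. The instance count, machine type, zone, image family, network, SSH user, and key path are all assumptions chosen for the example, not values taken from the original template.

```hcl
# Hypothetical sketch: every literal value below is an assumption.
resource "google_compute_instance" "managers" {
  count        = 3                           # assumed number of managers
  name         = "manager${count.index + 1}" # manager1, manager2, ...
  machine_type = "n1-standard-1"             # assumed machine type
  zone         = "us-central1-a"             # assumed zone

  boot_disk {
    initialize_params {
      image = "coreos-cloud/coreos-stable"   # assumed CoreOS image family
    }
  }

  network_interface {
    network = "default"                      # assumed network
    access_config {}                         # empty block requests an ephemeral public IP
  }

  metadata = {
    # Assumed SSH user and public key path, so Ansible can log in later.
    ssh-keys = "core:${file(pathexpand("~/.ssh/id_rsa.pub"))}"
  }
}
```

Using count with count.index keeps the managers in a single resource block, which also makes their addresses easy to collect later with a splat expression.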

2. Set up your Swarm workers

Similarly, I have defined a set of Swarm workers based on the CoreOS image, and used Terraform's resource dependencies feature to ensure the Swarm managers are deployed first. Note the use of the depends_on keyword:

resource "google_compute_instance" "workers" {
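The worker template is also cut off after its first line. A minimal sketch of how depends_on could be used here, again in Terraform 0.12+ syntax, is shown below. It refers to the google_compute_instance.managers resource from the previous step; the count, machine type, zone, image family, and network are assumptions for illustration only.

```hcl
# Hypothetical sketch: every literal value below is an assumption.
resource "google_compute_instance" "workers" {
  count        = 3                          # assumed number of workers
  name         = "worker${count.index + 1}" # worker1, worker2, ...
  machine_type = "n1-standard-1"            # assumed machine type
  zone         = "us-central1-a"            # assumed zone

  boot_disk {
    initialize_params {
      image = "coreos-cloud/coreos-stable"  # assumed CoreOS image family
    }
  }

  network_interface {
    network = "default"                     # assumed network
    access_config {}                        # ephemeral public IP
  }

  # Explicit dependency: Terraform creates all manager instances
  # before it starts creating any worker.
  depends_on = [google_compute_instance.managers]
}
```

Terraform normally infers ordering from references between resources. Since the workers do not reference any manager attribute, depends_on is what makes the ordering explicit.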

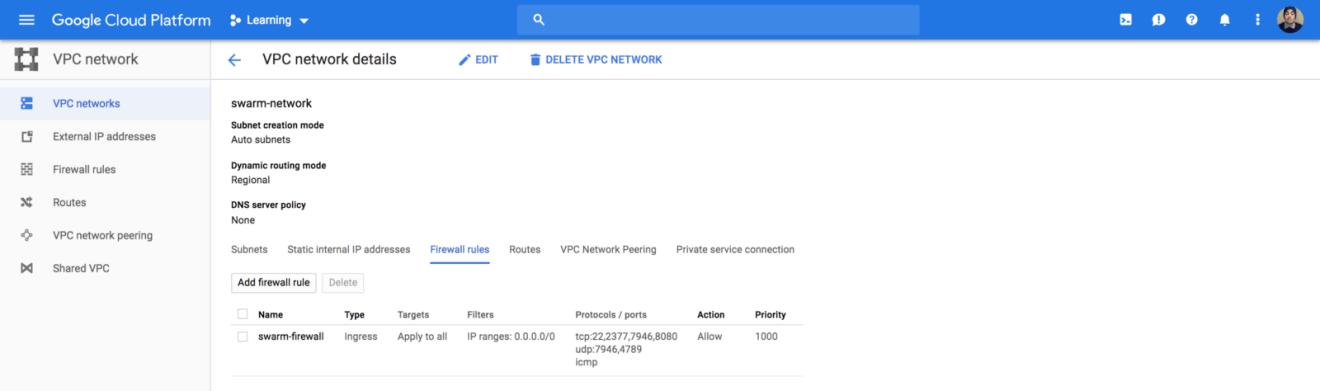

3. Define your network rules

I have also defined a network interface with a list of firewall rules that allow inbound traffic for cluster management, Raft sync communication, Docker overlay network traffic, and SSH from anywhere:

resource "google_compute_firewall" "swarm" {
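The rule set itself is truncated in this copy. The sketch below shows one way such a firewall could be written, using the ports Docker documents for Swarm mode: TCP 2377 for cluster management, TCP and UDP 7946 for node-to-node communication, and UDP 4789 for overlay network traffic, plus TCP 22 for SSH. The rule name and the use of the default network are assumptions.

```hcl
# Hypothetical sketch: the name and network are assumptions.
resource "google_compute_firewall" "swarm" {
  name    = "swarm-firewall"
  network = "default"

  allow {
    protocol = "tcp"
    # 22: SSH, 2377: cluster management, 7946: node communication
    ports = ["22", "2377", "7946"]
  }

  allow {
    protocol = "udp"
    # 7946: node communication, 4789: overlay network (VXLAN) traffic
    ports = ["7946", "4789"]
  }

  # Matches the "from anywhere" wording in the text.
  source_ranges = ["0.0.0.0/0"]
}
```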

4. Automate your inventory with Terraform

To take automation to the next level, let’s use Terraform's template_file data source to generate a dynamic Ansible inventory from the Terraform state file:

data "template_file" "inventory" {
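Since only the first line of this data source is visible, here is a minimal sketch of how the rendering could work in Terraform 0.12+ syntax. It assumes a hypothetical template at templates/inventory.tpl containing ${managers} and ${workers} placeholders, and it writes the result with a local_file resource from the hashicorp/local provider, which is a choice made for this example and not necessarily what the original template does.

```hcl
# Hypothetical sketch: the template path, variable names, and the use of
# local_file are assumptions.
data "template_file" "inventory" {
  template = file("${path.module}/templates/inventory.tpl")

  vars = {
    # One public IP per line, collected from every instance of each resource.
    managers = join("\n", google_compute_instance.managers[*].network_interface[0].access_config[0].nat_ip)
    workers  = join("\n", google_compute_instance.workers[*].network_interface[0].access_config[0].nat_ip)
  }
}

# Write the rendered inventory next to the Terraform templates so that
# ansible-playbook can pick it up with -i inventory.
resource "local_file" "inventory" {
  content  = data.template_file.inventory.rendered
  filename = "${path.module}/inventory"
}
```

Because the vars reference attributes of both instance resources, Terraform renders the inventory only after the managers and workers exist.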

The template file has the following format; its placeholders will be replaced by the IP addresses of the Swarm managers and workers at runtime:

[managers]

Finally, let’s define Google Cloud to be the default provider:

provider "google" {
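The provider block is truncated as well. A minimal sketch might look like the following, where the key file name, project ID, and region are placeholders to be replaced with your own values. The credentials argument points at the service account JSON key downloaded earlier.

```hcl
# Hypothetical sketch: the file name, project ID, and region are placeholders.
provider "google" {
  credentials = file("account.json") # service account key downloaded from IAM
  project     = "my-project-id"
  region      = "us-central1"
}
```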

5. Set up Ansible roles to provision instances

Once the templates are defined, we will use Ansible to provision our instances and turn them into a Swarm cluster. To that end, I created three Ansible roles:

- python: as its name implies, it installs Python on the machine. CoreOS is a minimal Linux distribution that ships with little beyond the tools needed to run containers, so Python, which Ansible requires on the target machine, has to be installed first.

- swarm-init: execute the docker swarm init command on the first manager and store the swarm join tokens.

- swarm-join: join the node to the cluster using the token generated previously.

By now, your main playbook will look something like:

---

6. Test your configuration

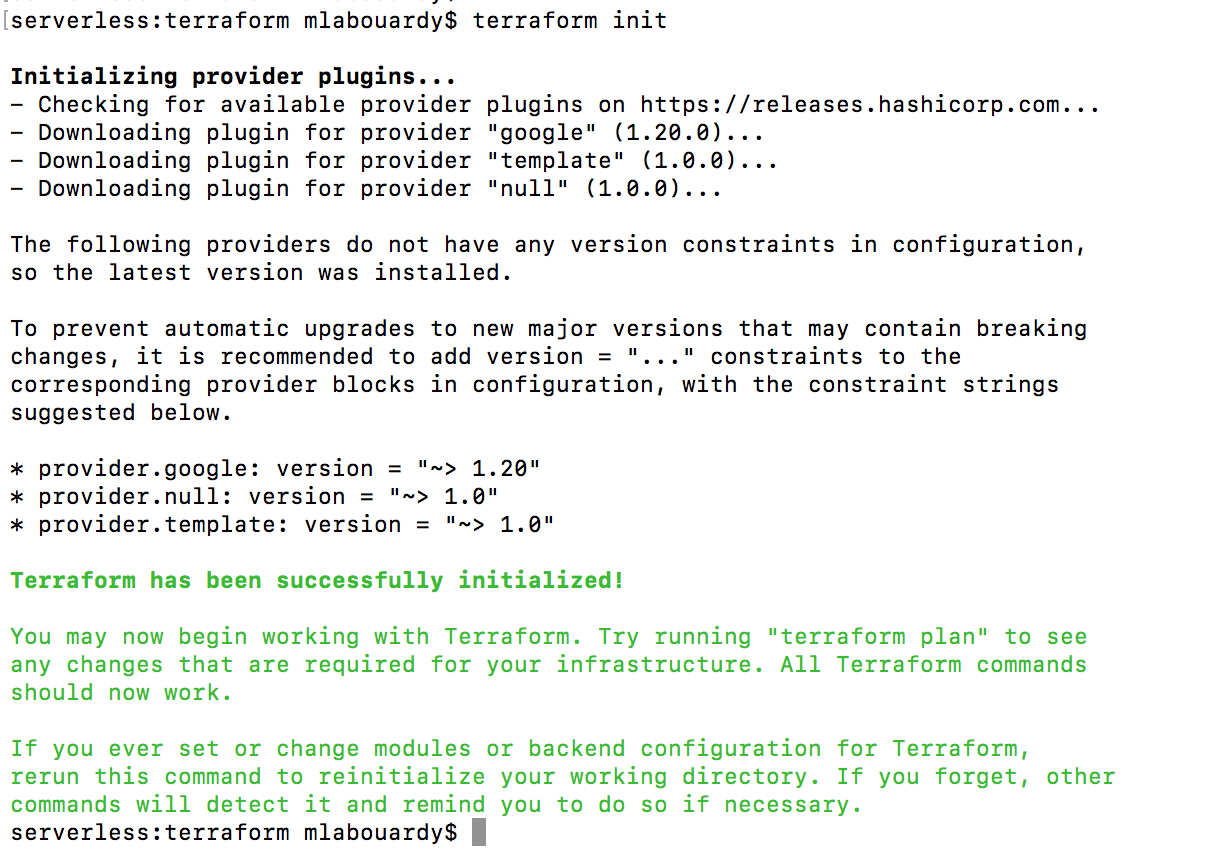

To test it out, open a new terminal session and run the terraform init command to download the Google provider:

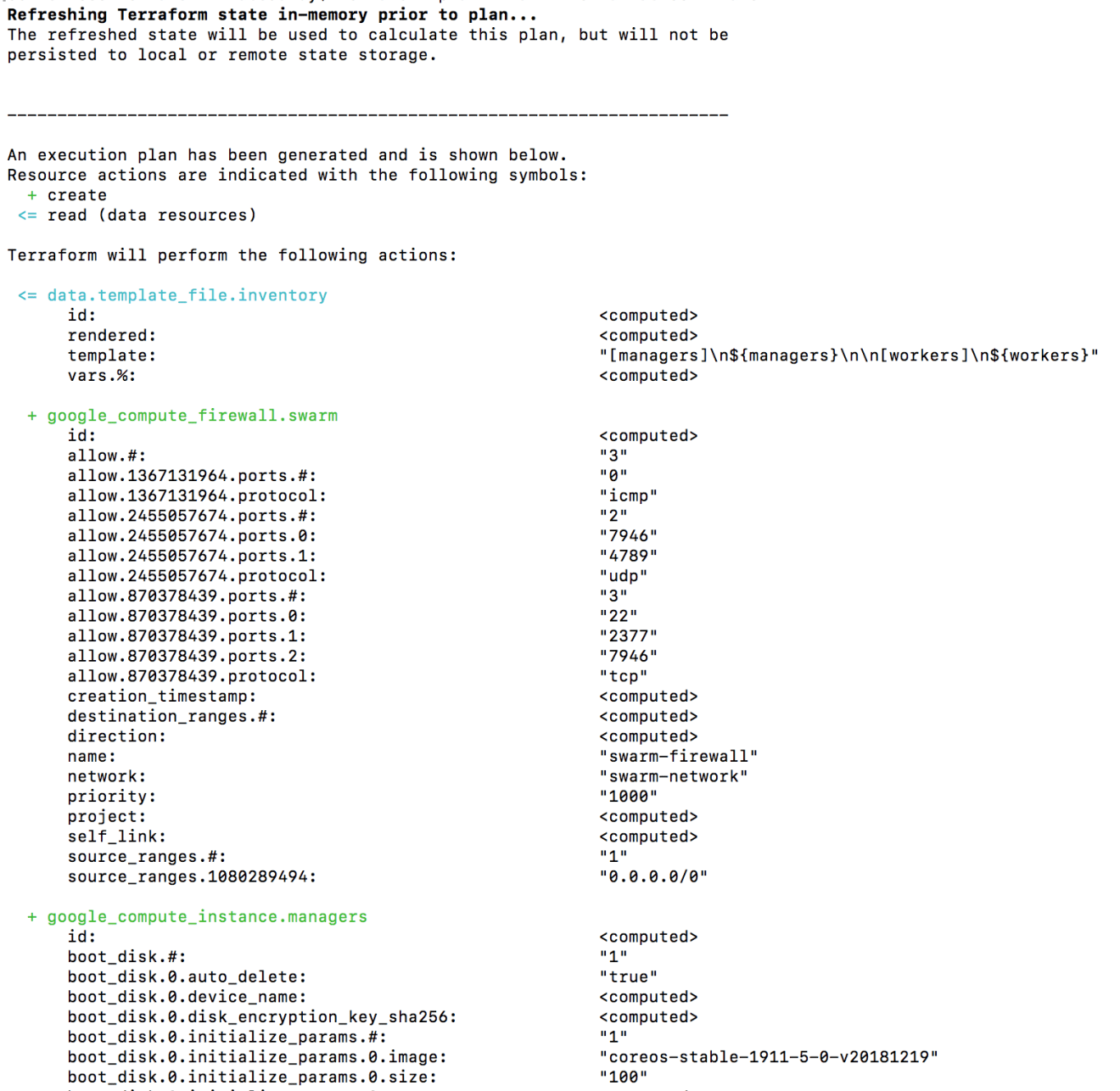

Create an execution plan (a dry run) with the terraform plan command. It lists the resources that will be created before anything is changed, which helps with debugging and catching mistakes early, as shown in the next screenshot:

Once you have reviewed the execution plan and are ready, apply the changes to GCP by running the terraform apply command.

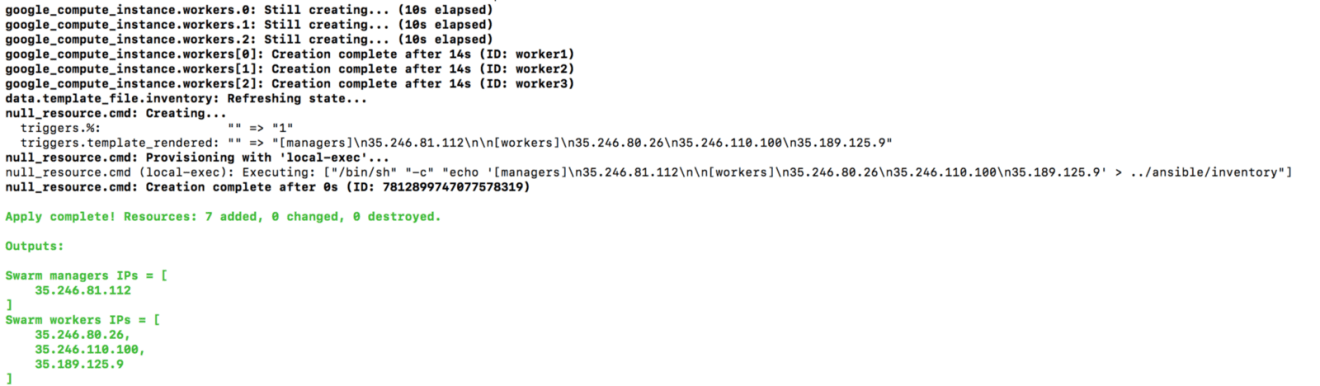

The following output will be displayed (some parts were cropped for brevity):

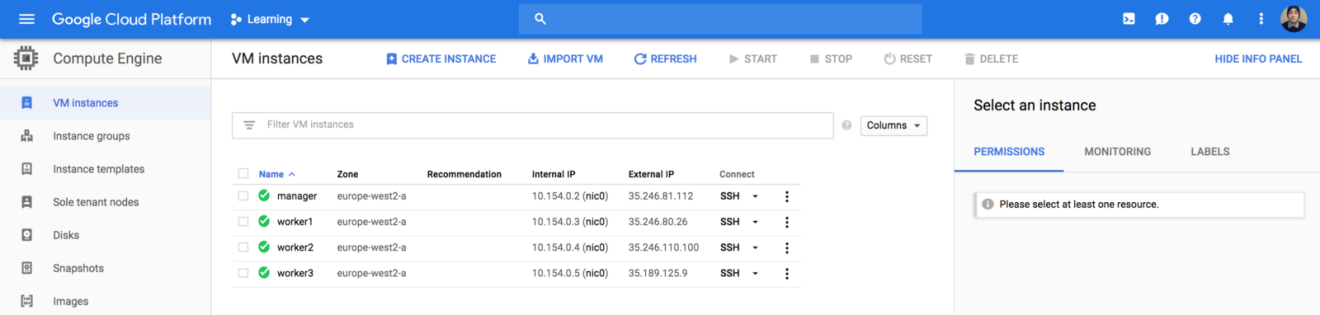

If you head back to the Compute Engine dashboard, you should see that your instances were created successfully:

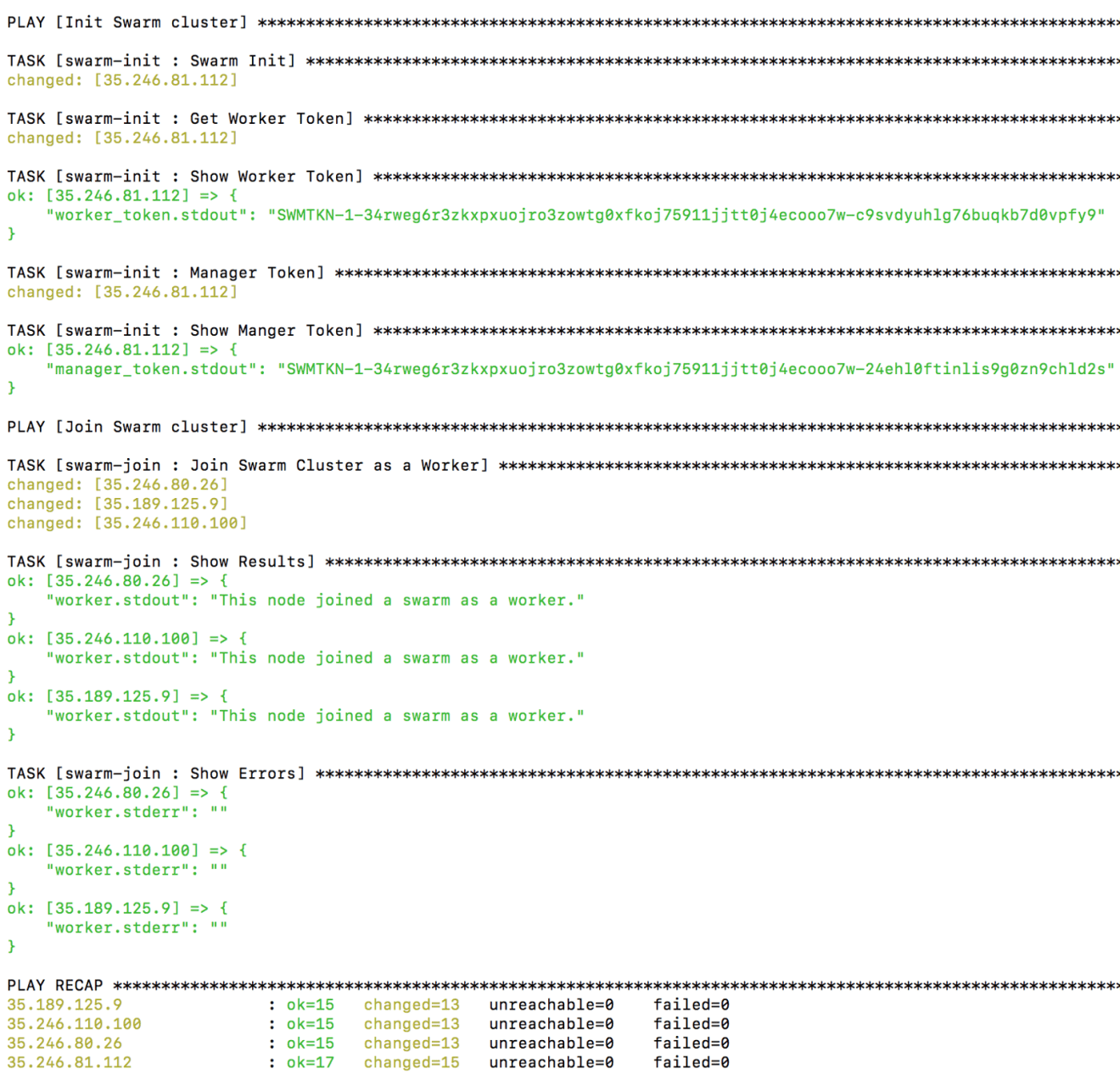

7. Create your Swarm cluster with Ansible

Now that our instances are created, we need to turn them into a Swarm cluster with Ansible. Run the following command:

ansible-playbook -i inventory main.yml

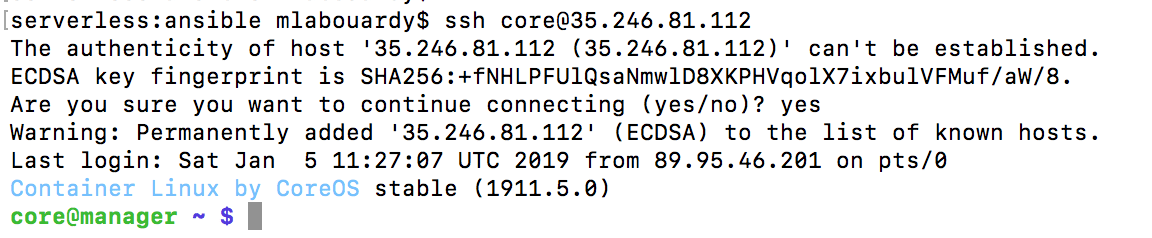

Next, SSH to the manager instance using its public IP address:

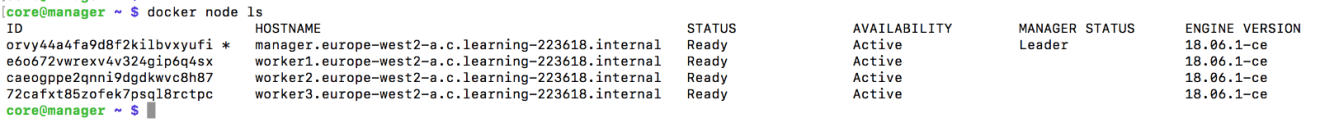

If you run docker node ls, you will get a list of nodes in the swarm:

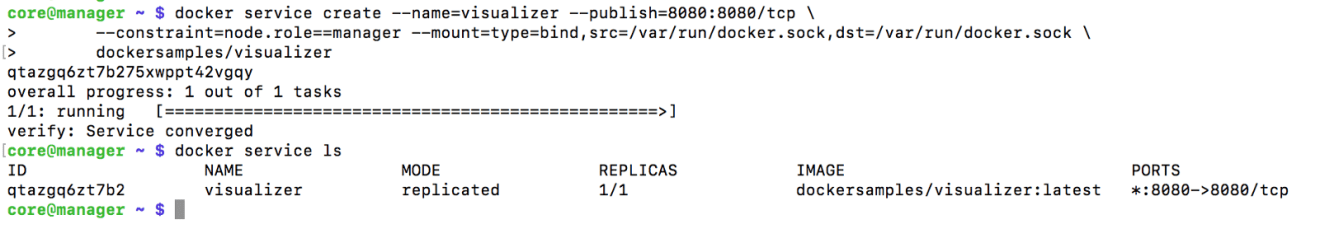

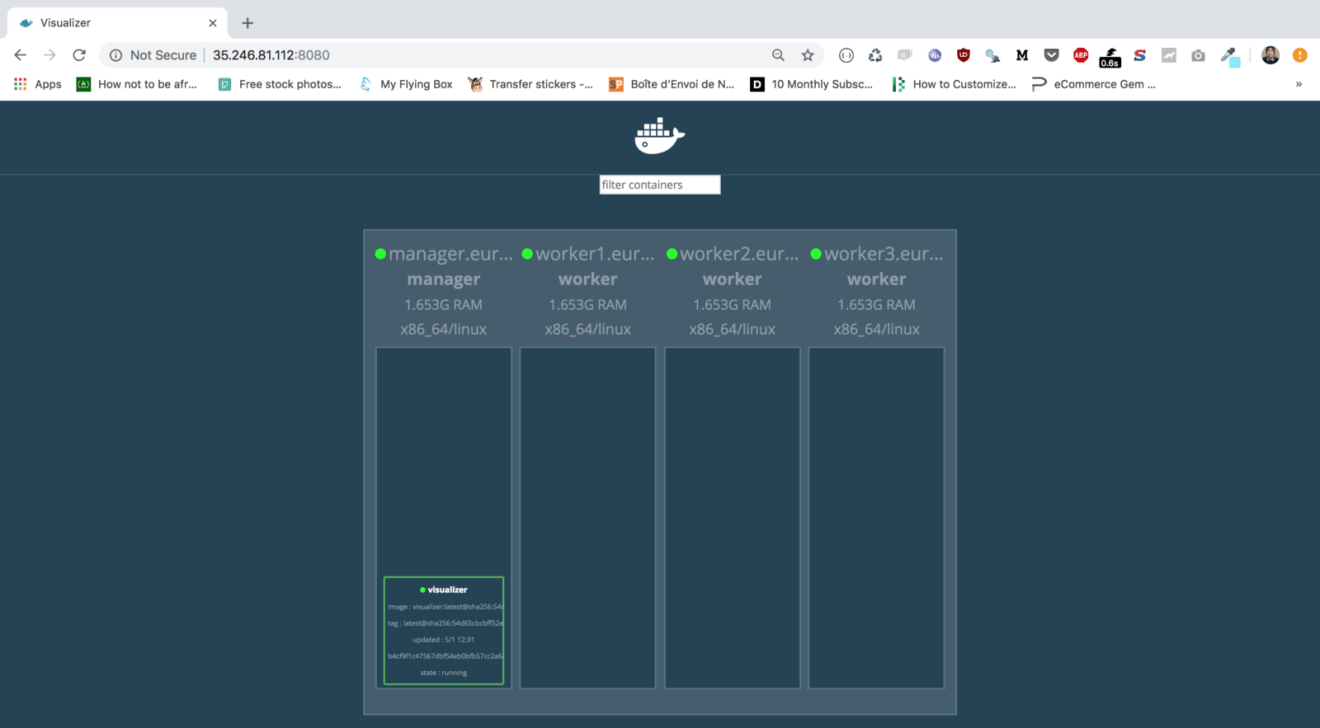

Deploy the visualizer service with the following command:

docker service create --name=visualizer --publish=8080:8080/tcp \

8. Update your network rules

The service is exposed on port 8080 of the instance, so we need to allow inbound traffic on that port. You can use Terraform to update the existing firewall rules:

resource "google_compute_firewall" "swarm" {
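The updated resource is truncated in this copy. Assuming the firewall allows the standard Swarm ports and SSH, the change could amount to adding 8080 to the TCP port list, as in the sketch below. The rule name and network are assumptions.

```hcl
# Hypothetical sketch: the name and network are assumptions.
resource "google_compute_firewall" "swarm" {
  name    = "swarm-firewall"
  network = "default"

  allow {
    protocol = "tcp"
    # 8080 is the new entry, opened for the visualizer service.
    ports = ["22", "2377", "7946", "8080"]
  }

  allow {
    protocol = "udp"
    ports    = ["7946", "4789"]
  }

  source_ranges = ["0.0.0.0/0"]
}
```

Because the resource address stays the same, Terraform treats this as a change to the existing firewall resource and does not create a second one.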

Run terraform apply again to create the new ingress rule. Terraform will detect the change and ask you to confirm it:

If you point your favorite browser to http://instance_ip:8080, the following dashboard will be displayed, which confirms that our cluster is fully set up:

In an upcoming post, we will take this further by creating a production-ready Swarm cluster on GCP inside a VPC, and by provisioning Swarm managers and workers on demand using instance groups that scale with increases or decreases in load.

We will also learn how to use Packer to bake a CoreOS machine image with Python preinstalled, and how to use Terraform and Jenkins to automate the infrastructure deployment!

Drop your comments, feedback, or suggestions below, or connect with me directly on Twitter @mlabouardy.